Rome – Jun 10, 2025

Translated, a leader in AI-powered language solutions, today announced a major milestone for its translation AI, Lara, made possible through close collaboration with Lenovo, a global leader in high-performance computing. Built for high-volume production environments, Lara now combines what were once considered competing goals: the fluency and reasoning of an LLM and the low hallucination rate of machine translation, both delivered with near-instant responsiveness.

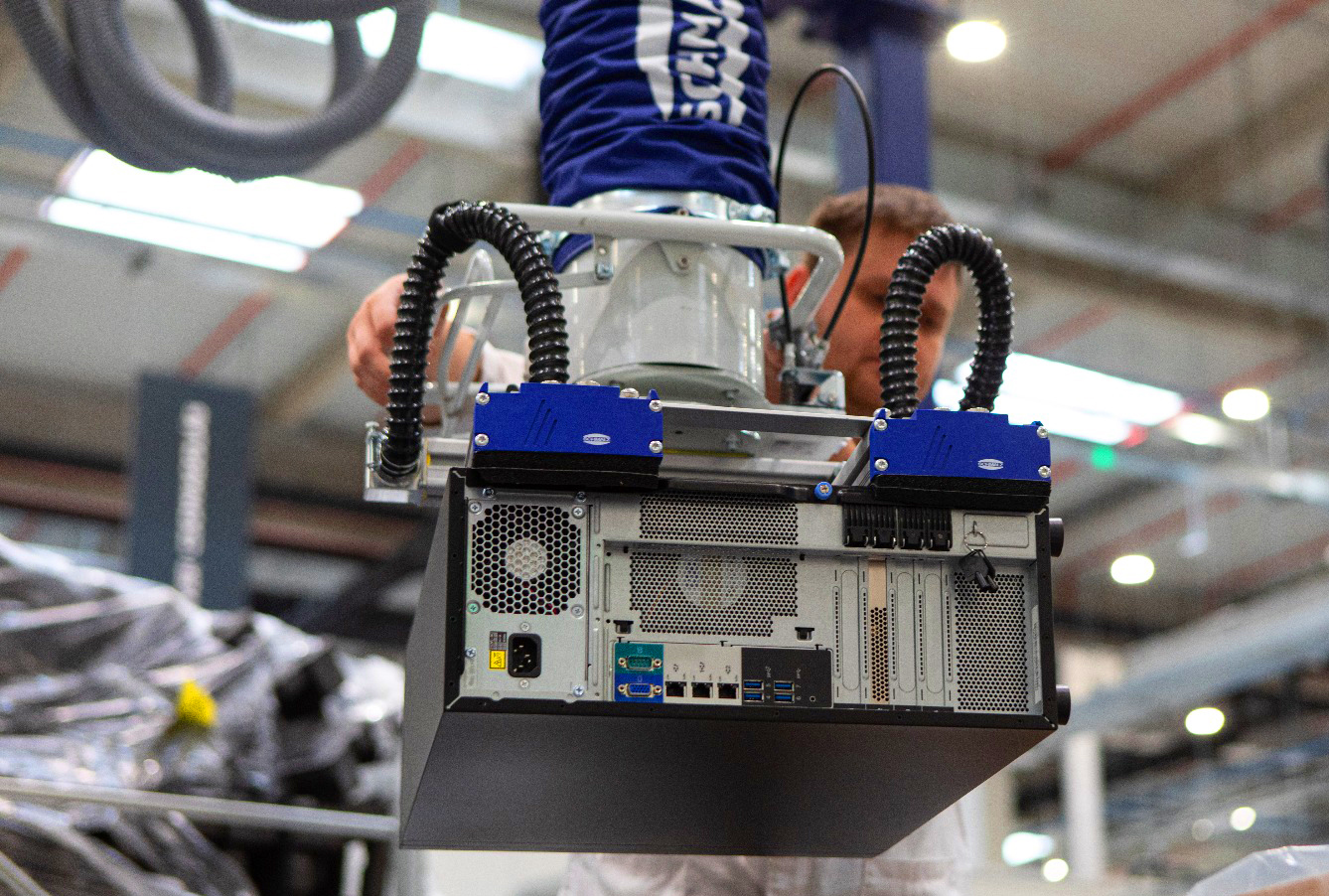

To achieve this result, Translated co-designed a new hardware solution with Lenovo, purpose-built for translation, and developed an innovative decoding system to fully leverage the latest chips. Optimized for latency-critical scenarios like live chats, trading, and news, Lara now achieves sub-second P99 latency (99% of requests complete in under one second) across the 50 most widely spoken languages. This breakthrough sets a new standard for high-quality, low-latency translation and enables new cost-efficient applications, such as translating only the portion of content needed upfront and processing the rest on demand. Lara is now 10 to 40 times faster than leading LLMs in translation tasks while delivering higher quality, making it a perfect fit for modern business workflows.

For this deployment, Lenovo provided ThinkSystem servers powered by NVIDIA’s GPUs, the world’s most advanced processors for AI workloads. Each server supports eight of the latest high-speed, interconnected GPUs, which power advancements in AI, including large language models, machine learning, model training, and high-performance computing. Through intensive co-design work, Translated and Lenovo optimized the architecture for the translation task. The ThinkSystem servers were installed in two data centers, in Washington and California, strategically positioned near major internet hubs to keep network latency between Lara and the main internet backbones under one millisecond.