From MT to LLM

In 2017, researchers in the field of natural language processing invented a new technology called the Transformer to tackle the hardest problem in artificial intelligence: translation. It was first developed and used for Neural Machine Translation (NMT), in which a task-specific AI model is (typically) pre-trained only for translation. Transformers subsequently evolved and became a core part of generative AI models, today's LLMs. These foundational AI models are pre-trained for a wide range of natural language tasks and have proved able to translate well.

Use of LLMs in translation

Early experience has shown that LLMs can perform surprisingly well at translation, given that they weren't specifically designed for it. In many cases, they can compete with NMT systems, especially on noisy input like user-generated content. However, this strong performance has been restricted to a small set of high-resource languages.

LLM- vs NMT-Based Enterprise Translation Solutions

After data security and system integration, quality, latency, price and languages supported are the most important operational decision criteria for enterprise customers when purchasing automated translation.

To understand LLMs' suitability for enterprises, the following NMT- and LLM-based solutions have been tested with typical and specific "enterprise content" as well as widely available public content.

• Google Translate API: a major public NMT system

• DeepL Pro API: a major public NMT system

• GPT-4o: a leading foundational LLM

• Lara by Translated: an experimental LLM specifically fine-tuned for translation

For reference:

GPT-4o was configured using 5-shot plus RAG (equivalent to using a TM for adaptivity), with source content for context.

Lara uses expert-designed prompts plus RAG with source content for context.
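As a rough illustration of what "5-shot plus RAG" can mean in practice, the sketch below retrieves the five most similar entries from a translation memory and places them in the prompt as examples, together with surrounding source text for context. It is a minimal sketch under stated assumptions, not the configuration used in the tests: the TM entries, the function names `retrieve_examples` and `build_prompt`, the prompt wording, and the use of plain string similarity for retrieval are all hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical translation memory: (source, target) pairs.
TM = [
    ("Reset your password", "Reimposta la password"),
    ("View your order", "Visualizza il tuo ordine"),
    ("Contact customer support", "Contatta l'assistenza clienti"),
    ("Update your billing address", "Aggiorna l'indirizzo di fatturazione"),
    ("Track your order", "Traccia il tuo ordine"),
    ("Cancel your subscription", "Annulla l'abbonamento"),
]


def retrieve_examples(segment, tm, k=5):
    """Return the k TM pairs whose source text is most similar to the segment.

    Assumption: simple character-level similarity stands in for whatever
    retrieval method a production system would use.
    """
    def similarity(pair):
        return SequenceMatcher(None, segment.lower(), pair[0].lower()).ratio()

    return sorted(tm, key=similarity, reverse=True)[:k]


def build_prompt(segment, context, tm, src_lang="English", tgt_lang="Italian", k=5):
    """Assemble a k-shot translation prompt with retrieved TM examples."""
    lines = [
        f"Translate from {src_lang} to {tgt_lang}.",
        "",
        "Surrounding source text for context:",
        context,
        "",
        "Examples:",
    ]
    for src, tgt in retrieve_examples(segment, tm, k):
        lines.append(f"{src_lang}: {src}")
        lines.append(f"{tgt_lang}: {tgt}")
    # The segment to translate goes last, with the target left open.
    lines += ["", f"{src_lang}: {segment}", f"{tgt_lang}:"]
    return "\n".join(lines)


prompt = build_prompt(
    "Track your orders",
    "You can manage everything from your account page.",
    TM,
)
print(prompt)
```

The resulting string would then be sent to the LLM. Because the examples are chosen per segment, the prompt adapts to the content much as a translation memory would.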

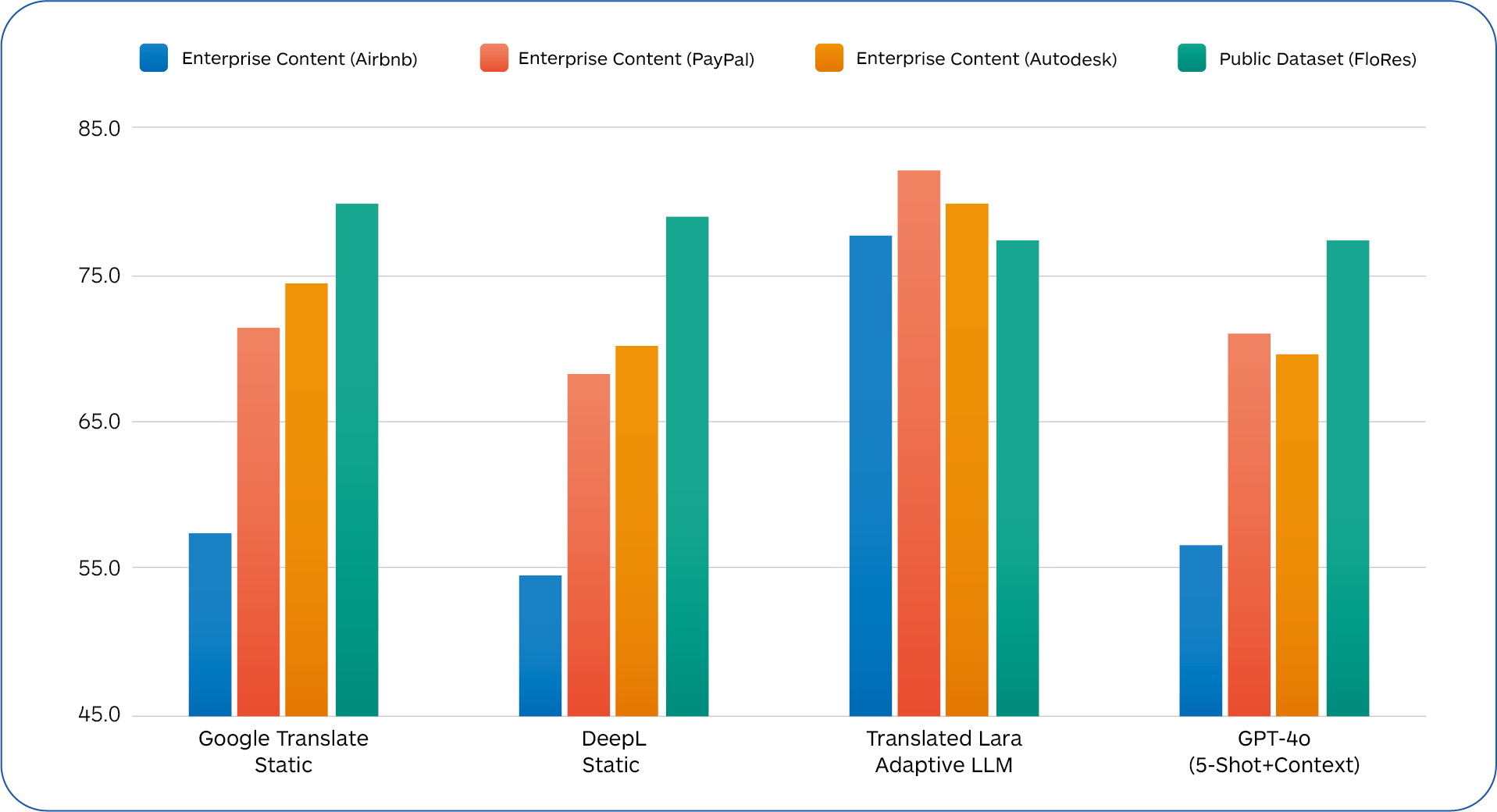

Quality - COMET Scores

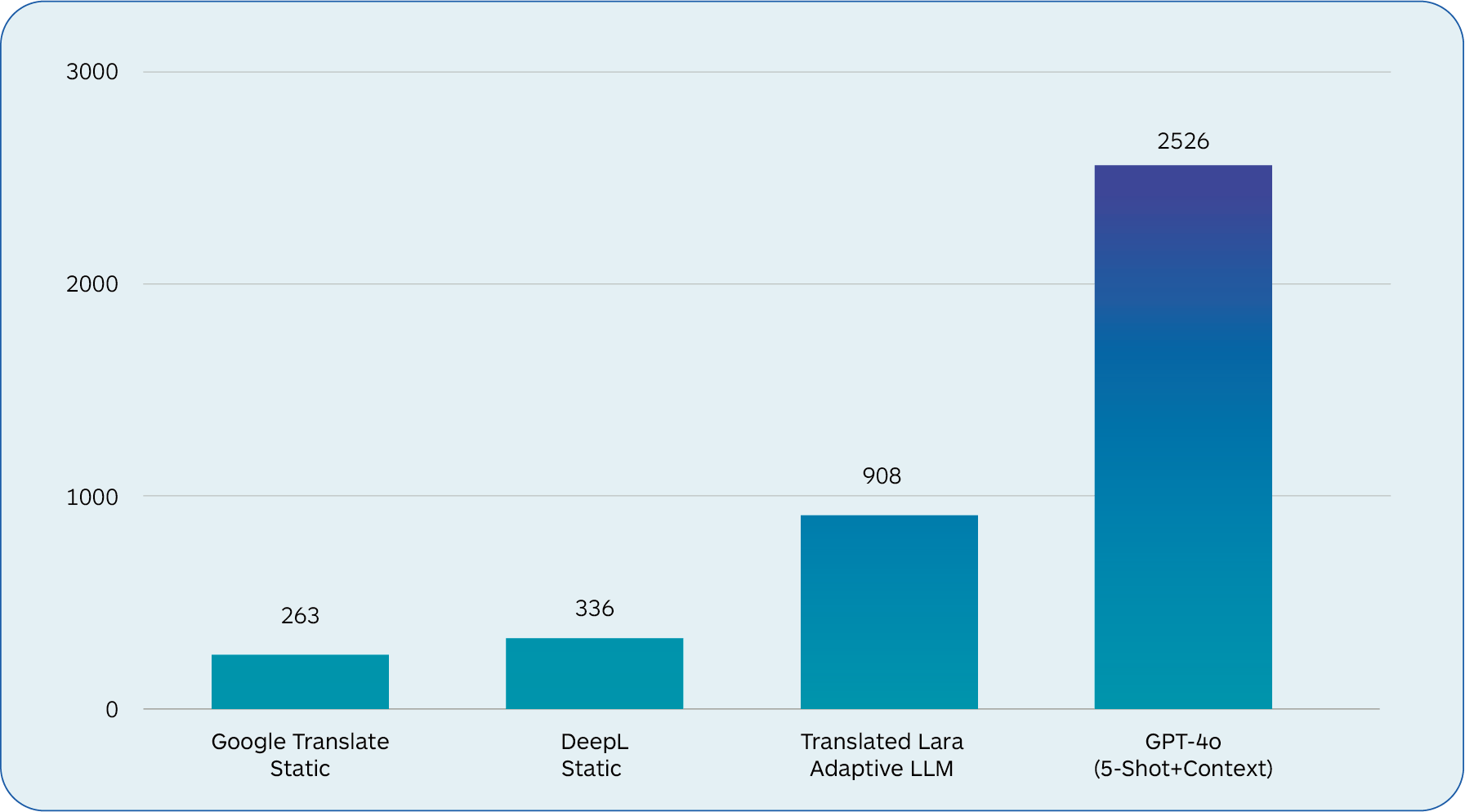

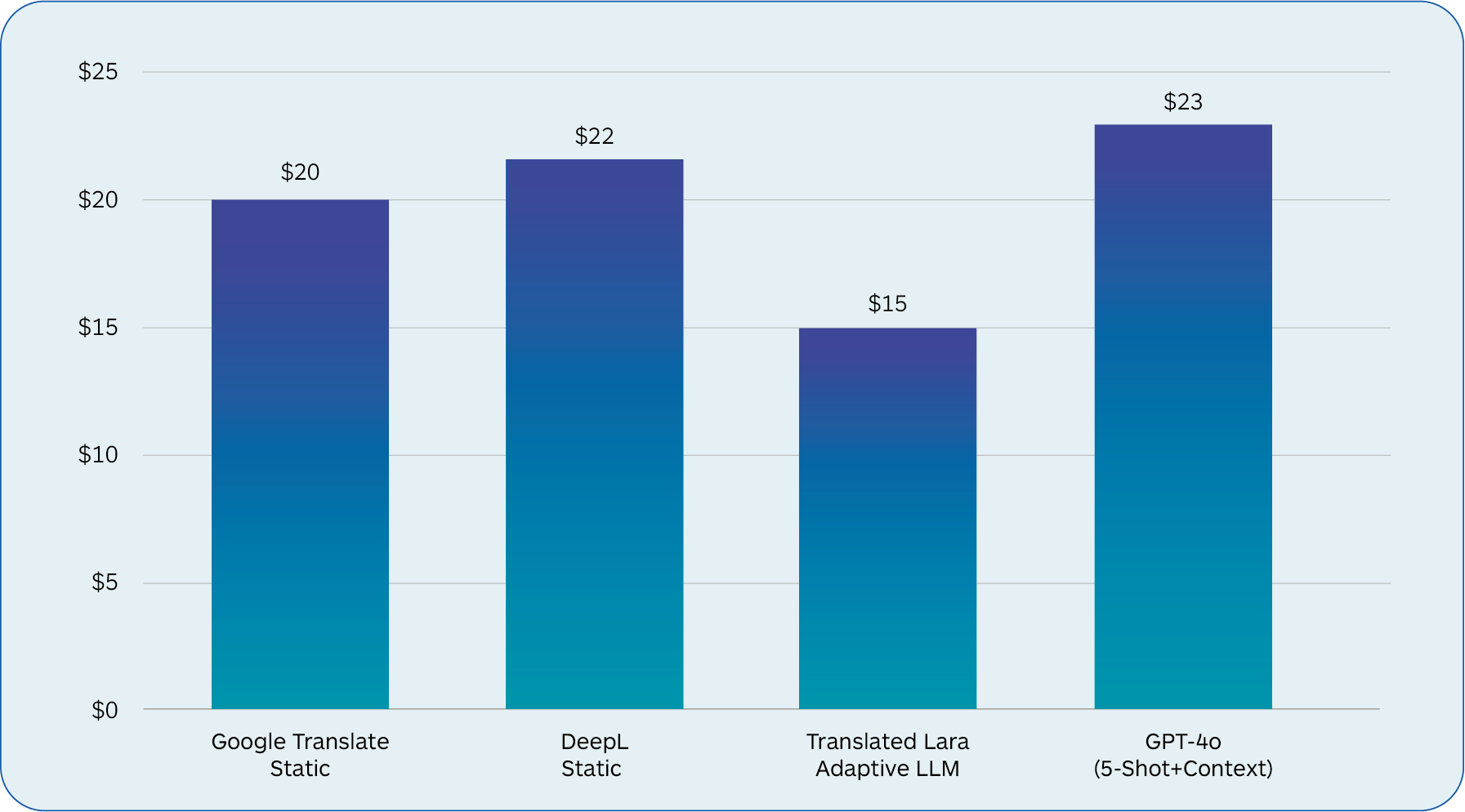

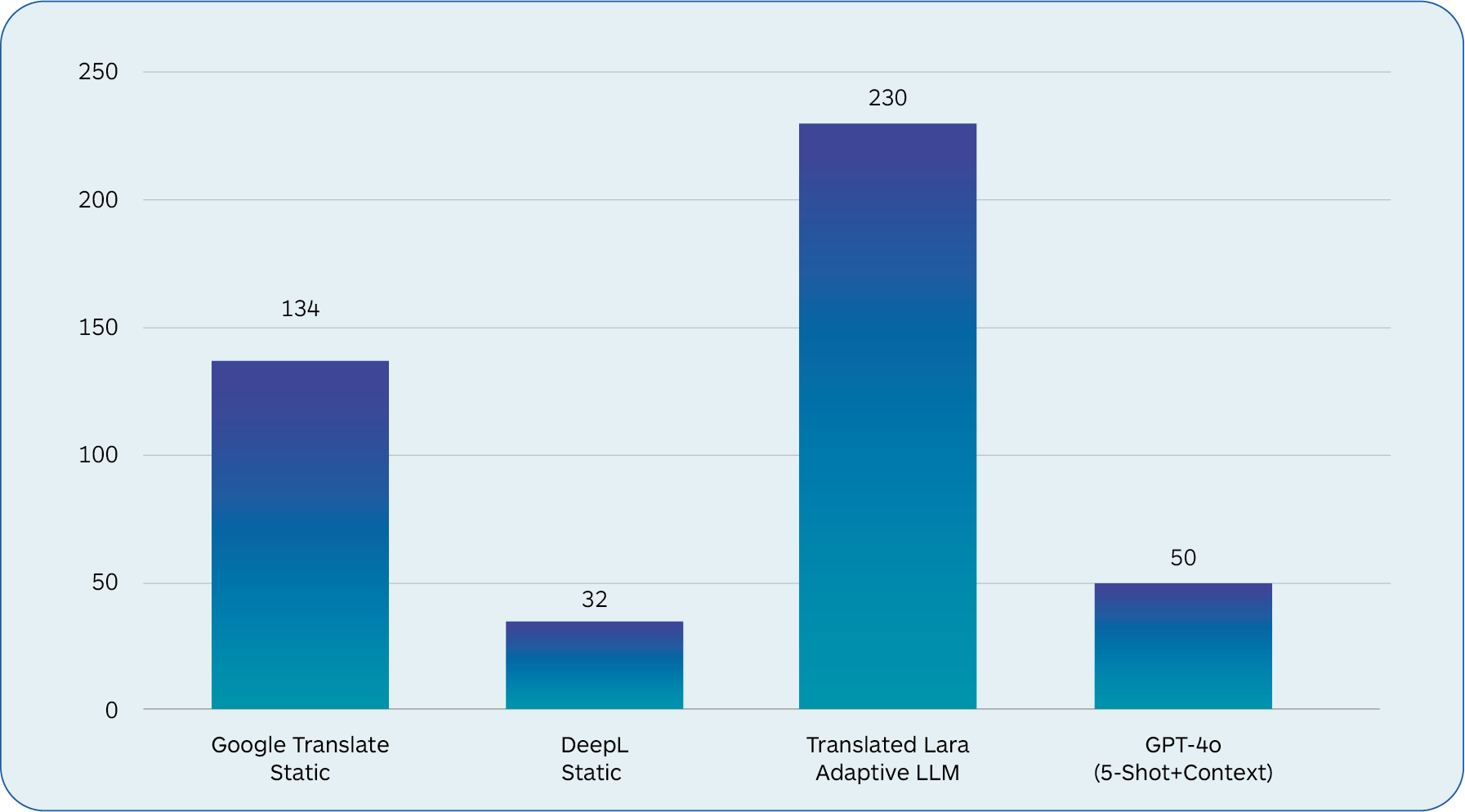

• When trained with a focus on adaptivity, LLMs can outperform state-of-the-art NMT systems in high-resource languages, especially when translating into English. Quality drops significantly in specialized fields like Legal or Healthcare.

• LLMs produce more fluent text but struggle with accuracy, being more prone to semantic errors and hallucinations. Their responses cannot be easily directed or controlled because LLMs are sensitive to cues.

• LLMs have a better sense of context at the document level. But they falter with larger or implicit context.

• LLMs can quickly adapt to new tasks with minimal examples and align translations with the desired tone of voice, potentially reducing post-editing costs and improving translation quality through automated evaluation and post-editing tasks.

Latency

• LLMs suffer from high latency, making them less suitable for high-volume and real-time production translation workflows. However, latency can be significantly improved by tuning an LLM specifically for a translation task.

• NMTs are 25x faster than LLMs. There are many initiatives to reduce inference costs, the most ambitious of which is 8 times faster than competitors, but still not as fast as an NMT.

Costs

• Although costs are starting to come down, LLMs are generally more expensive. However, an LLM fine-tuned to specific translation tasks and provided with expert-designed prompts can be more cost-effective than MT.

• LLMs are more expensive to train and operate, so retraining can’t be done frequently. Non-English interactions incur higher costs due to tokenization being tailored to English.

• Token-based pricing is more difficult to estimate, understand, and plan around.

Languages Supported

• LLMs perform better with language variants, but traditional NMT systems offer broader language coverage.

• Due to high training costs, progress in supporting lower-resource languages will take time for LLMs.
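To make the cost points above more concrete, the following sketch contrasts a per-character price, as commonly charged by NMT APIs, with a token-based LLM price. Every number in it is a placeholder chosen for illustration, not a real price list or a measured tokenization ratio, and the function names `nmt_cost` and `llm_cost` are hypothetical. It shows why token-based pricing is harder to plan around: the bill depends on prompt overhead and on how many characters each token covers, which tends to be lower for non-English text.

```python
def nmt_cost(source_chars, price_per_million_chars):
    """Cost of a job priced per source character."""
    return source_chars / 1_000_000 * price_per_million_chars


def llm_cost(
    source_chars,
    chars_per_token_src,
    chars_per_token_tgt,
    prompt_overhead_tokens,
    price_in_per_million_tokens,
    price_out_per_million_tokens,
    expansion=1.0,
):
    """Cost of a job priced per input and output token.

    expansion: assumed ratio of target length to source length, in characters.
    prompt_overhead_tokens: instructions, few-shot examples, and context.
    """
    input_tokens = source_chars / chars_per_token_src + prompt_overhead_tokens
    output_tokens = source_chars * expansion / chars_per_token_tgt
    return (
        input_tokens * price_in_per_million_tokens
        + output_tokens * price_out_per_million_tokens
    ) / 1_000_000


# Placeholder figures only: a 4,000-character job with 1,000 tokens of prompt.
job_chars = 4_000
into_english = llm_cost(job_chars, 4, 4, 1_000, 5.0, 15.0)
# Same job into a language assumed to need twice as many tokens per character.
into_other = llm_cost(job_chars, 4, 2, 1_000, 5.0, 15.0)
flat = nmt_cost(job_chars, 20.0)

print(f"LLM, target at 4 chars/token: {into_english:.4f}")
print(f"LLM, target at 2 chars/token: {into_other:.4f}")
print(f"Per-character pricing:        {flat:.4f}")
```

The per-character figure can be computed from the source file alone, while the token-based figure changes with the target language and the prompt design, so it can only be estimated before the job runs.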

Data & Security

• LLMs must handle large and diverse data sets, requiring more robust encryption and transparent data handling practices to protect sensitive localization content and comply with privacy regulations.

• While NMT systems present a stable threat landscape, LLMs face evolving threats and higher risks of misuse, requiring advanced cybersecurity measures.

• There are growing concerns about the scarcity of high-quality training data, and the compensation of copyright holders for training data.

Integration

• Integrating LLMs into enterprise workflows presents challenges due to high computational requirements, the need for specialized fine-tuning, and complex implementation processes.

• Current models are more intricate than traditional NMT systems, often requiring human oversight to manage bias, discrimination, and data privacy concerns.

• Efforts to reduce model complexity and frequent updates are costly, necessitating strategic long-term decisions for robust and efficient solutions.

Final considerations

General-purpose LLMs like GPT-4o have higher costs and latency compared to traditional NMT models. A custom LLM fine-tuned for a translation task, powered by expert-trained prompts, and provided with translation memories and source context, such as Translated’s Lara, performs best in cost, quality, and latency. It will generate cost savings in production enterprise settings because it requires less human oversight and correction.

Expected Availability

• Task-specific LLMs for translation, at enterprise production standards, are expected by the end of 2024 for the top 10 high-resource languages.

• A hybrid configuration may offer the best ROI during the next 12-18 months.
