Introducing Lara

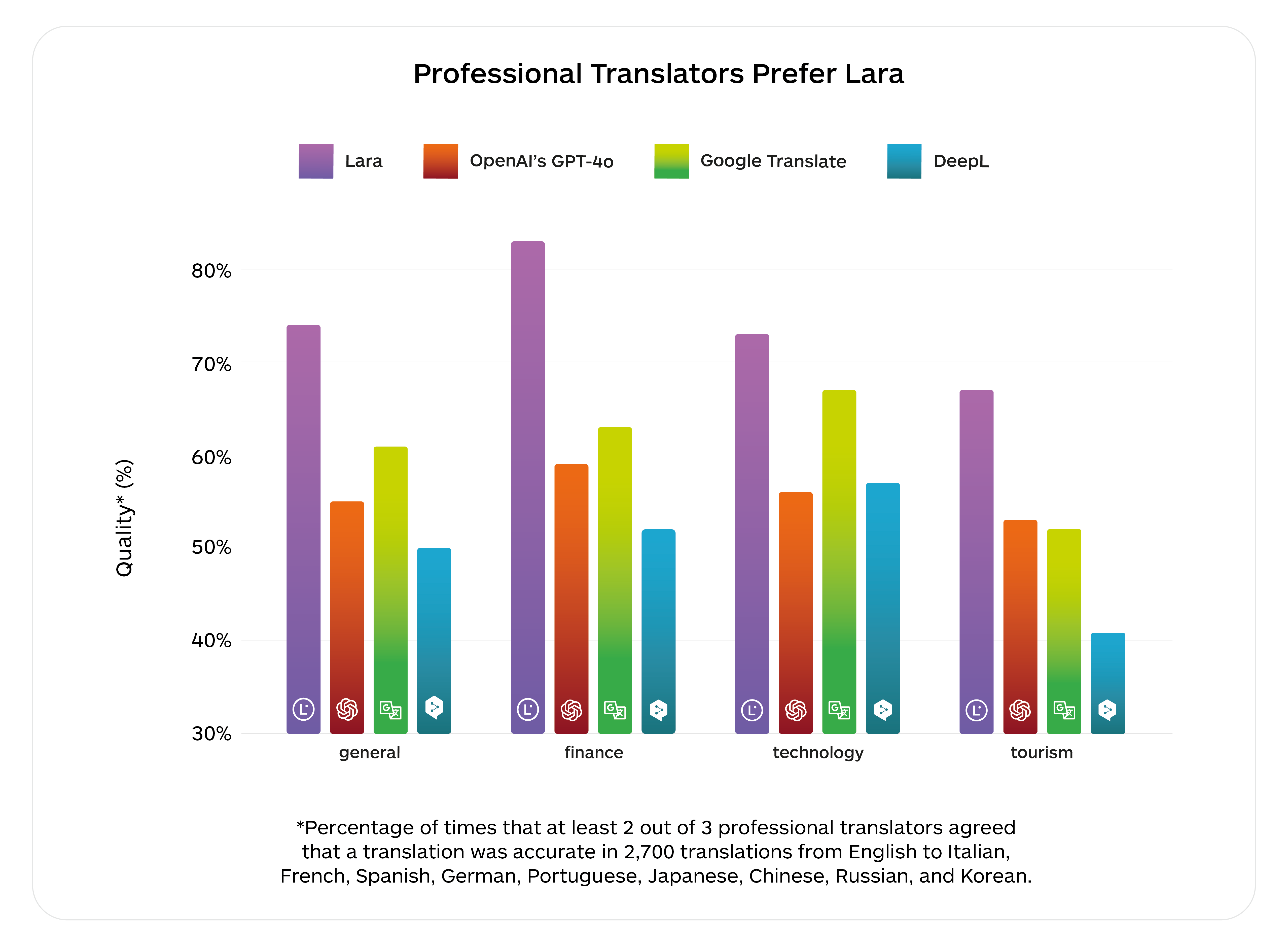

Our CEO, Marco Trombetti, introduced Lara, the culmination of over 15 years of machine translation research. In 2011, we pioneered adaptive MT. Since 2017, we've powered our neural MT system with the Transformer model, which was invented for translation and later became foundational to generative AI. When large language models gained popularity following the release of ChatGPT, many were amazed by their fluency and ability to handle large contexts, but were also frustrated by their lack of accuracy. We were already working tirelessly to combine the power of large language models with the accuracy of machine translation. Today, we're proud to have reached that milestone with Lara, the world's best translation AI.

Lara redefines machine translation by explaining its choices, leveraging contextual understanding and reasoning to deliver professional-grade translations users can trust. It is trained on the largest, most curated dataset of real-world translations globally available. Lara was trained on the NVIDIA AI platform using 1.2 million GPU hours thanks to our long-term collaboration with NVIDIA.

With Lara, companies can tackle previously unimaginable localization projects. Translators and multilingual creators will enjoy Lara in their daily work, achieving new levels of productivity and accuracy.

Lara's error rate is just 2.4 per thousand words. In 2025, we're aiming even higher: we plan to harness 20 million GPU hours to move closer to language singularity.